CAT3D: Create Anything in 3D with Multi-View Diffusion Models

TL;DR: Create 3D scenes from any number of real or generated images.

How it works

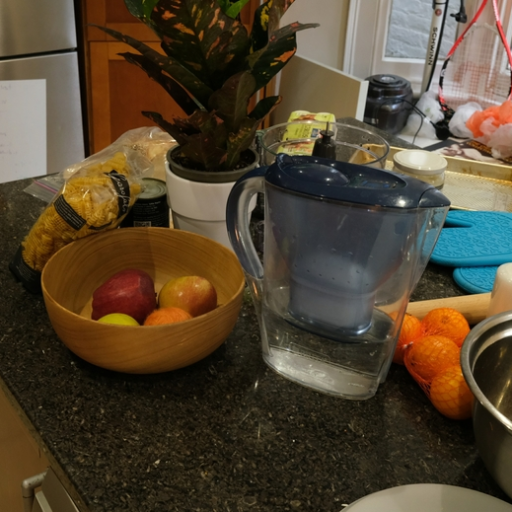

Given any number of input images, we use a multi-view diffusion model conditioned on those images to generate novel views of the scene. The resulting views are fed to a robust 3D reconstruction pipeline, producing a 3D representation that can be rendered interactively. The total processing time, including both view generation and 3D reconstruction, can be as little as one minute.
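The two-stage structure described above can be sketched as follows. This is only an illustration of the control flow, not the CAT3D implementation: `View`, `create_3d`, `generate_views`, and `reconstruct` are hypothetical names, the two stages are passed in as plain callables, and feeding the input views to reconstruction alongside the generated ones is an assumption.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Sequence


@dataclass
class View:
    """An image paired with the camera pose it is seen from (hypothetical type)."""
    image: Any              # placeholder for pixel data
    pose: Any               # placeholder for camera parameters
    generated: bool = False  # True if produced by the view-generation stage


def create_3d(
    input_views: Sequence[View],
    target_poses: Sequence[Any],
    generate_views: Callable[[Sequence[View], Sequence[Any]], List[View]],
    reconstruct: Callable[[Sequence[View]], Any],
) -> Any:
    """Two-stage sketch: generate novel views, then reconstruct a 3D scene."""
    # Stage 1: produce novel views conditioned on the input images.
    novel_views = generate_views(input_views, target_poses)
    # Stage 2: fit a 3D representation to the views.
    # Assumption: input views are passed along with the generated ones.
    return reconstruct(list(input_views) + list(novel_views))


# Stand-in stages so the sketch runs end to end; a real system would
# call a multi-view diffusion model and a 3D reconstruction method here.
def fake_generate(views: Sequence[View], poses: Sequence[Any]) -> List[View]:
    return [View(image=f"generated@{p}", pose=p, generated=True) for p in poses]


def fake_reconstruct(views: Sequence[View]) -> dict:
    return {
        "num_views": len(views),
        "num_generated": sum(v.generated for v in views),
    }


scene = create_3d(
    [View(image="photo", pose="pose0")],
    ["pose1", "pose2"],
    fake_generate,
    fake_reconstruct,
)
print(scene)
```

Keeping the stages as interchangeable callables mirrors the description above, where view generation and reconstruction are separate steps connected only by the set of views.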

Interactive Results

Explore our 3D scenes, converted to Gaussian Splats.

Comparisons to other methods

Compare the renders and depth maps of our method CAT3D (right) with baseline methods (left). Try selecting different methods and scenes!

Method overview

Acknowledgements

We would like to thank Daniel Watson, Rundi Wu, Richard Tucker, Jason Baldridge, Michael Niemeyer, Rick Szeliski, Dana Roth, Jordi Pont-Tuset, Andeep Torr, Irina Blok, Doug Eck, and Henna Nandwani for their valuable contributions to this work. We also extend our gratitude to Shlomi Fruchter, Kevin Murphy, Mohammad Babaeizadeh, Han Zhang and Amir Hertz for training the base text-to-image latent diffusion model.